Understanding artificial neural networks

Today, we started from the same ribosomes and finite state transducers we talked about last time, and saw how, even though they follow simple rules, they allow for highly complex function. We saw how more complex and more abstracted things (brains, computers) are built up in layers: neural/metal circuits, neurons/logic gates, proteins/IC wafers, amino acids/silicon, and atoms. Computers additionally have levels of abstraction over the circuits: applications, higher-level programs, the operating system, assembler code, etc. Ribosomes translate between levels of complexity (nucleotides to proteins); compilers, which are more complex than finite state transducers but similar to them, translate between levels of abstraction.
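To make the "simple rules" idea concrete, here is a minimal sketch of a ribosome-like finite state transducer in Python. It is a toy under stated assumptions: the machine has only two states, the codon table holds just a handful of entries from the standard genetic code, and the names `CODON_TABLE` and `translate` are made up for this illustration. A real ribosome does far more than this.

```python
# Toy transducer: reads RNA and emits amino acids, one codon (3 letters) at a time.
# Only a few codons from the standard genetic code are listed here.
CODON_TABLE = {
    "AUG": "Met",  # also the start codon
    "UUU": "Phe",
    "GGC": "Gly",
    "GCU": "Ala",
    "UAA": None,   # stop codon
}

def translate(rna):
    state = "scanning"  # two states: "scanning" for a start codon, then "translating"
    protein = []
    i = 0
    while i + 3 <= len(rna):
        codon = rna[i:i + 3]
        if state == "scanning":
            if codon == "AUG":
                state = "translating"
                protein.append("Met")
                i += 3
            else:
                i += 1  # slide along one nucleotide and keep looking
        else:
            # A codon missing from the toy table raises KeyError.
            amino_acid = CODON_TABLE[codon]
            if amino_acid is None:
                break  # stop codon: translation ends
            protein.append(amino_acid)
            i += 3
    return protein

print(translate("GGAUGUUUGGCGCUUAAGG"))
```

Each step only looks at the current state and the next three letters, yet the output is a structured chain of amino acids.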

We then looked at a specific abstraction: the artificial neural network. To understand it, we started with biological neurons, their anatomy, and their principles. We considered how artificial neurons work in an analogous way: they take presynaptic inputs, apply a weight to each synapse (corresponding to postsynaptic receptor density), and the weighted inputs together determine whether or not the postsynaptic neuron activates.

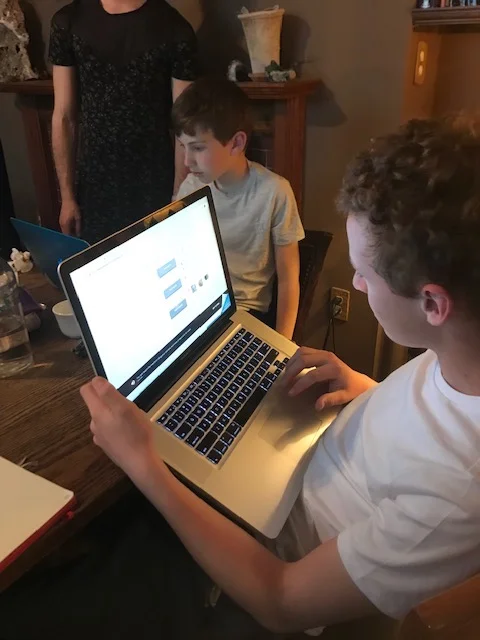

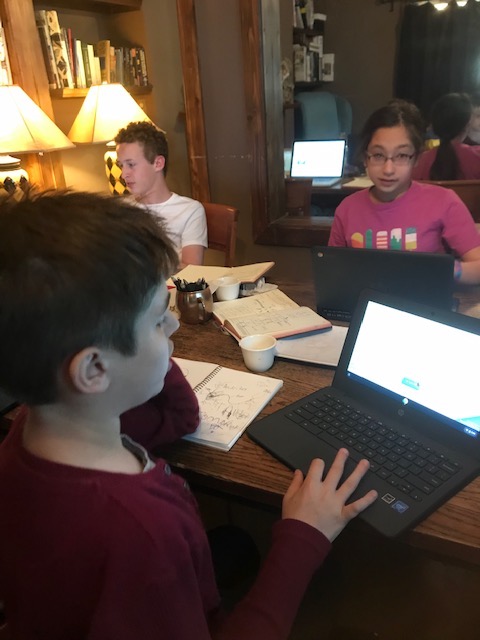
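The artificial neuron described above can be sketched in a few lines of Python. This is only an illustration: the sigmoid activation, the bias term, and the specific numbers are assumptions chosen for the example, not something fixed by the biology or by any particular library.

```python
import math

def neuron(inputs, weights, bias):
    # Weighted sum of the presynaptic inputs, one weight per "synapse".
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Sigmoid squashes the sum into (0, 1): near 0 is "not firing", near 1 is "firing".
    return 1.0 / (1.0 + math.exp(-total))

inputs = [1.0, 0.0, 1.0]     # which presynaptic neurons are active (made-up values)
weights = [2.0, -1.0, 0.5]   # positive = excitatory, negative = inhibitory
bias = -1.0                  # shifts how much input is needed to activate

activation = neuron(inputs, weights, bias)
print(round(activation, 3))
```

Here the weighted sum is 2.0 + 0.0 + 0.5 - 1.0 = 1.5, so the neuron comes out fairly strongly "on". Changing a weight changes how much that one input matters, which is what training adjusts.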

We then tried training a neural network to recognize objects at teachablemachine.withgoogle.com. We saw some of its dynamics: the concept of a classification problem, what sorts of mistakes it would make, how it depended on a sufficiently large training set, the idea of confidence in one classification over another, and what it did with noisy training sets.

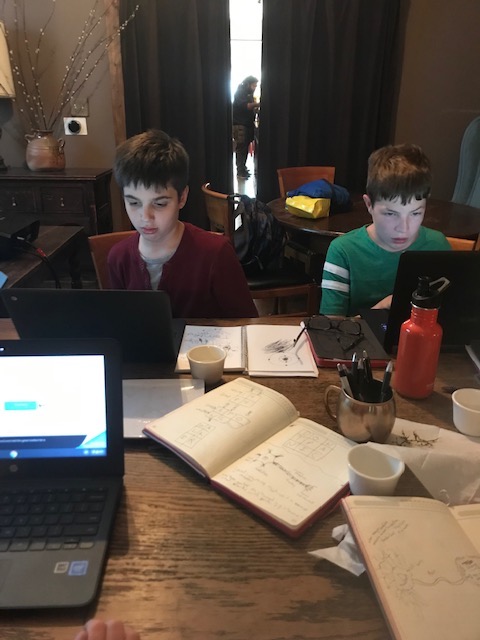
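One common way a classifier turns raw scores into the kind of confidence bars we saw is the softmax function. The sketch below assumes that approach; we did not look at how Teachable Machine computes its numbers internally, and the labels and scores here are invented for the example.

```python
import math

def softmax(scores):
    # Subtract the largest score first so exp() cannot overflow.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    # Each confidence is that class's share of the total; they sum to 1.
    return [e / total for e in exps]

labels = ["mug", "phone", "hand"]   # hypothetical classes
scores = [2.0, 1.0, 0.1]            # hypothetical raw network outputs

confidences = softmax(scores)
best = labels[confidences.index(max(confidences))]

for label, c in zip(labels, confidences):
    print(f"{label}: {c:.2f}")
print("prediction:", best)
```

The prediction is simply the class with the largest share, so a noisy or ambiguous image shows up as confidences that are close together rather than one clear winner.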

Finally, some of us tried using playground.tensorflow.org, which lets you configure the hyperparameters of a neural network in various ways with four different types of training sets. We used this to start talking about backpropagation and how the weights are updated, and to understand that simple networks could perform linear classifications quite robustly, but that complex non-linear or noisy data sets needed larger "deep" networks (with more hidden layers) to "understand" the concept of a circle or spiral.
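To show what "updating the weights" means, here is a minimal sketch of backpropagation on a tiny network with two inputs, one hidden layer of two neurons, and one output. The assumptions are ours: sigmoid activations, a squared-error loss, plain gradient descent, a single made-up training example, and arbitrary starting weights. The playground offers other activations and settings, and real training uses many examples.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w_hidden, b_hidden, w_out, b_out):
    # Hidden layer: each hidden neuron takes a weighted sum of the inputs.
    hidden = [sigmoid(sum(xi * wi for xi, wi in zip(x, w)) + b)
              for w, b in zip(w_hidden, b_hidden)]
    # Output neuron: weighted sum of the hidden activations.
    output = sigmoid(sum(h * w for h, w in zip(hidden, w_out)) + b_out)
    return hidden, output

def train_step(x, target, w_hidden, b_hidden, w_out, b_out, lr):
    hidden, output = forward(x, w_hidden, b_hidden, w_out, b_out)
    loss = 0.5 * (output - target) ** 2

    # Backward pass: the error signal at the output...
    delta_out = (output - target) * output * (1.0 - output)
    # ...is passed back through the (old) output weights to each hidden neuron.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w_out, hidden)]

    # Gradient descent: nudge every weight against its share of the error.
    new_w_out = [w - lr * delta_out * h for w, h in zip(w_out, hidden)]
    new_b_out = b_out - lr * delta_out
    new_w_hidden = [[w - lr * d * xi for w, xi in zip(ws, x)]
                    for ws, d in zip(w_hidden, delta_hidden)]
    new_b_hidden = [b - lr * d for b, d in zip(b_hidden, delta_hidden)]
    return loss, new_w_hidden, new_b_hidden, new_w_out, new_b_out

# Arbitrary starting weights and one made-up training example.
w_hidden = [[0.5, -0.3], [0.8, 0.2]]
b_hidden = [0.0, 0.0]
w_out = [0.4, -0.6]
b_out = 0.0
x, target = [1.0, 0.5], 1.0

losses = []
for _ in range(50):
    loss, w_hidden, b_hidden, w_out, b_out = train_step(
        x, target, w_hidden, b_hidden, w_out, b_out, lr=0.5)
    losses.append(loss)

print(f"loss before training: {losses[0]:.4f}")
print(f"loss at the last step: {losses[-1]:.4f}")
```

Each pass measures how wrong the output was, assigns blame backwards through the layers, and shifts every weight slightly in the direction that reduces the error. The hidden layer is what lets a network bend its decision boundary; without it, the output neuron can only draw a straight line through the data.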